Journal Publications

G. D’Innocenzo, S. Della Sala and M.I. Coco (2022). Similar mechanisms of temporary bindings for identity and location of objects in healthy ageing: An eye-tracking study with naturalistic scenes. Scientific Reports, 12, 11163. DOI: 10.1038/s41598-022-13559-6

ABSTRACT

The ability to maintain visual working memory (VWM) associations about the identity and location of objects has at times been found to decrease with age. To date, however, this age-related difficulty was mostly observed in artificial visual contexts (e.g., object arrays), and so it is unclear whether it may manifest in naturalistic contexts, and in which ways. In this eye-tracking study, 26 younger and 24 healthy older adults were asked to detect changes in a critical object situated in a photographic scene (192 in total), about its identity (the object becomes a different object but maintains the same position), location (the object only changes position) or both (the object changes in location and identity). Aging was associated with a lower change detection performance. A change in identity was harder to detect than a location change, and performance was best when both features changed, especially in younger adults. Eye movements displayed minor differences between age groups (e.g., shorter saccades in older adults) but were similarly modulated by the type of change. Latencies to the first fixation were longer and the amplitude of incoming saccades was larger when the critical object changed in location. Once fixated, the target object was inspected for longer when it only changed in identity compared to location. Visually salient objects were fixated earlier, but saliency did not affect any other eye movement measures considered, nor did it interact with the type of change. Our findings suggest that even though aging results in lower performance, it does not selectively disrupt temporary bindings of object identity, location, or their association in VWM, and highlight the importance of using naturalistic contexts to discriminate the cognitive processes that undergo detriment from those that are instead spared by aging.

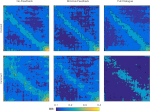

M.I. Coco, D. Mønster, G. Leonardi, R. Dale and S. Wallot (2021). Unidimensional and multidimensional methods for recurrence quantification analysis with crqa. The R Journal, 13(1), 145-163. DOI: 10.32614/RJ-2021-062

ABSTRACT

Recurrence quantification analysis is a widely used method for characterizing patterns in time series. This article presents a comprehensive survey for conducting a wide range of recurrence-based analyses to quantify the dynamical structure of single and multivariate time series and capture coupling properties underlying leader-follower relationships. The basics of recurrence quantification analysis (RQA) and all its variants are formally introduced step-by-step from the simplest auto recurrence to the most advanced multivariate case. Importantly, we show how such RQA methods can be deployed under a single computational framework in R using a substantially renewed version of our crqa 2.0 package. This package includes implementations of several recent advances in recurrence-based analysis, among them applications to multivariate data and improved entropy calculations for categorical data. We show concrete applications of our package to example data, together with a detailed description of its functions and some guidelines on their usage.

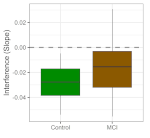

M.I. Coco, G. Merendino, G. Zappala and S. Della Sala (2021). Semantic interference mechanisms on long-term visual memory and their eye-movement signatures in Mild Cognitive Impairment. Neuropsychology, 35(5), 498-513. DOI: 10.1037/neu0000734

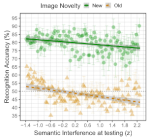

A. Mikhailova, A. Raposo, S. Della Sala and M.I. Coco (2021). Eye-movements reveal semantic interference effects during the encoding of naturalistic scenes in long-term memory. Psychonomic Bulletin & Review, 28(5), 1601-1614. DOI: 10.3758/s13423-021-01920-1

ABSTRACT

Similarity-based semantic interference (SI) hinders memory recognition. Within long-term visual memory paradigms, the more scenes (or objects) from the same semantic category are viewed, the harder it is to recognize each individual instance. A growing body of evidence shows that overt attention is intimately linked to memory. However, it is yet to be understood whether SI mediates overt attention during scene encoding, and so explains its detrimental impact on recognition memory. In the current experiment, participants watched 372 photographs belonging to different semantic categories (e.g., a kitchen) with different frequencies (4, 20, 40 or 60 images), while being eye-tracked. After 10 minutes, they were presented with the same 372 photographs plus 372 new photographs and asked whether they recognized (or not) each photo (i.e., old/new paradigm). We found that the greater the SI, the poorer the recognition performance, especially for old scenes of which memory representations existed. Scenes more widely explored were better recognized, but for increasing SI, participants focused on more local regions of the scene in search of its potentially distinctive details. Attending to the centre of the display, or to scene regions rich in low-level saliency, was detrimental to recognition accuracy, and as SI increased participants were more likely to rely on visual saliency. The complexity of maintaining faithful memory representations for increasing SI also manifested in longer fixation durations; in fact, more successful encoding was also associated with shorter fixations. Our study highlights the interdependence between attention and memory during high-level processing of semantic information.

F. Cimminella, G. D’Innocenzo, S. Della Sala, A. Iavarone, C. Musella, and M.I. Coco (2021). Preserved extra-foveal processing of object semantics in Alzheimer’s disease. Journal of Geriatric Psychiatry and Neurology, 35(3), 418-433. DOI: 10.1177/08919887211016056

ABSTRACT

Alzheimer’s disease (AD) patients underperform on a range of tasks requiring semantic processing, but it is unclear whether this impairment is due to a generalised loss of semantic knowledge or to issues in accessing and selecting such information from memory. The objective of this eye-tracking visual search study was to determine whether semantic expectancy mechanisms known to support object recognition in healthy adults are preserved in AD patients. Furthermore, as AD patients are often reported to be impaired in accessing information in extra-foveal vision, we investigated whether that was also the case in our study. Twenty AD patients and 20 age-matched controls searched for a target object among an array of distractors presented extra-foveally. The distractors were either semantically related or unrelated to the target (e.g., a car in an array with other vehicles or kitchen items). Results showed that semantically related objects were detected with more difficulty than semantically unrelated objects by both groups, but more markedly by the AD group. Participants looked earlier and for longer at the critical objects when these were semantically unrelated to the distractors. Our findings show that AD patients can process the semantics of objects and access it in extra-foveal vision. This suggests that their impairments in semantic processing may reflect difficulties in accessing semantic information rather than a generalised loss of semantic memory.

M. Pagnotta, K. Laland and M.I. Coco (2020) Attentional coordination in demonstrator-observer dyads facilitates learning and predicts performance in a novel manual task. Cognition, 201, 104314. DOI: 10.1016/j.cognition.2020.104314

ABSTRACT

Observational learning is a form of social learning in which a demonstrator performs a target task in the company of an observer, who may as a consequence learn something about it. In this study, we approach social learning in terms of the dynamics of coordination rather than the more common perspective of transmission of information. We hypothesised that observers must continuously adjust their visual attention relative to the demonstrator’s time-evolving behaviour to benefit from it. We eye-tracked observers repeatedly watching videos showing a demonstrator solving one of three manipulative puzzles before attempting the task. The presence of the demonstrator’s face and the availability of his verbal instruction in the videos were manipulated. We then used recurrence quantification analysis to measure the dynamics of coordination between the overt attention of the observers and the demonstrator’s manipulative actions. Bayesian hierarchical logistic regression was applied to examine (1) whether the observers’ performance was predicted by such indexes of coordination, (2) how performance changed as they accumulated experience, and (3) whether the availability of speech and intentional gaze of the demonstrator mediated it. Results showed that learners better able to coordinate their eye movements with the manipulative actions of the demonstrator had an increasingly higher probability of success in solving the task. The availability of speech was beneficial to learning, whereas the presence of the demonstrator’s face was not. We argue that focusing on the dynamics of coordination between individuals may greatly improve understanding of the cognitive processes underlying social learning.

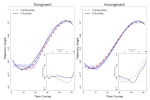

M.I. Coco, A. Nuthmann and O. Dimigen (2020) Fixation-related brain potentials during semantic integration of object-scene information. Journal of Cognitive Neuroscience, 32(4), 571-589. DOI: 10.1162/jocn_a_01504

ABSTRACT

In vision science, a particularly controversial topic is whether and how quickly the semantic information about objects is available outside foveal vision. Here, we aimed at contributing to this debate by coregistering eye movements and EEG while participants viewed photographs of indoor scenes that contained a semantically consistent or inconsistent target object. Linear deconvolution modeling was used to analyze the ERPs evoked by scene onset as well as the fixation-related potentials (FRPs) elicited by the fixation on the target object (t) and by the preceding fixation (t − 1). Object–scene consistency did not influence the probability of immediate target fixation or the ERP evoked by scene onset, which suggests that object–scene semantics was not accessed immediately. However, during the subsequent scene exploration, inconsistent objects were prioritized over consistent objects in extrafoveal vision (i.e., looked at earlier) and were more effortful to process in foveal vision (i.e., looked at longer). In FRPs, we demonstrate a fixation-related N300/N400 effect, whereby inconsistent objects elicit a larger frontocentral negativity than consistent objects. In line with the behavioral findings, this effect was already seen in FRPs aligned to the pretarget fixation t − 1 and persisted throughout fixation t, indicating that the extraction of object semantics can already begin in extrafoveal vision. Taken together, the results emphasize the usefulness of combined EEG/eye movement recordings for understanding the mechanisms of object–scene integration during natural viewing.

F. Cimminella, S. Della Sala and M.I. Coco (2020) Extra-foveal processing of object semantics guides early overt attention during visual search. Attention, Perception & Psychophysics, 82, 655-670. DOI: 10.3758/s13414-019-01906-1

ABSTRACT

Eye-tracking studies using arrays of objects have demonstrated that some high-level processing of object semantics can occur in extra-foveal vision, but its role in the allocation of early overt attention is still unclear. This eye-tracking visual search study contributes novel findings by examining the role of object-to-object semantic relatedness and visual saliency on search responses and eye-movement behaviour across arrays of increasing size (3, 5, 7). Our data show that a critical object was looked at earlier and for longer when it was semantically unrelated than related to the other objects in the display, both when it was the search target (target-present trials) and when it was a target’s semantically related competitor (target-absent trials). Semantic relatedness effects manifested already during the very first fixation after array onset, were consistently found for increasing set sizes, and were independent of low-level visual saliency, which did not play any role. We conclude that object semantics can be extracted early in extra-foveal vision and capture overt attention from the very first fixation. These findings pose a challenge to models of visual attention which assume that overt attention is guided by the visual appearance of stimuli, rather than by their semantics.

M.T. Borges, E.G. Fernandes and M.I. Coco (2020) Age-related differences during visual search: the role of contextual expectations and top-down control mechanisms. Aging, Neuropsychology and Cognition, 27(4), 489-516. DOI: 10.1080/13825585.2019.1632256

ABSTRACT

During visual search, cognitive control mechanisms activate to inhibit distracting information and efficiently orient attention towards contextually relevant regions likely to contain the search target. Cognitive ageing is known to hinder cognitive control mechanisms; however, little is known about their interplay with contextual expectations, and their role in visual search. In two eye-tracking experiments, we compared the performance of a younger and an older group of participants searching for a target object varying in semantic consistency with the search scene (e.g., a basket of bread vs. a clothes iron in a restaurant scene) after being primed with contextual information either congruent or incongruent with it (e.g., a restaurant vs. a bathroom). Primes were administered either as scenes (Experiment 1) or words (Experiment 2, which included scrambled words as neutral primes). Participants also completed two inhibition tasks (Stroop and Flanker) to assess their cognitive control. Older adults had greater difficulty than younger adults when searching for inconsistent objects, especially when primed with congruent information (Experiment 1), or a scrambled word (neutral condition, Experiment 2). When the target object violates the semantics of the search context, congruent expectations or perceptual distractors have to be suppressed through cognitive control, as they are irrelevant to the search. In fact, higher cognitive control, especially in older participants, was associated with better target detection in these more challenging conditions, although it did not influence eye-movement responses. These results shed new light on the links between cognitive control, contextual expectations and visual attention in healthy ageing.

E.G. Fernandes, M.I. Coco and H.P. Branigan (2019) When Eye Fixation might not reflect Online Ambiguity Resolution: A study on Structural Priming using the Visual-World Paradigm in Portuguese. Journal of Cultural Cognitive Science, 3, 65–87. DOI: 10.1007/s41809-019-00021-9

ABSTRACT

Research on structural priming in the visual-world paradigm (VWP) has examined how visual referents are looked at when participants are repeatedly exposed to sentences with the same or a different syntactic structure. A core finding is that participants look more at a visual referent when it is consistent with the primed interpretation. In this study, we examine the hypothesis that by using multiple primes, we should induce a stronger structural preference, and hence, observe more looks to the visual referent that is consistent with the interpretation of the primed structure. In three VWP eye-tracking experiments, Portuguese speakers were asked to read aloud one, two or three relative clause (RC) sentences that were morphologically disambiguated towards a high- or low-attachment reading. Then, they were presented with a visual display and listened to an ambiguous RC. Listeners fixated the referent consistent with the primed attachment more after one prime, but unexpectedly looked more at the referent consistent with the non-primed attachment following two and three primes. In a fourth experiment, we assessed the gaze pattern during unambiguous RC processing, and found a consistent preference for looking at the non-antecedent referent. Our experiments show that exposure to multiple primes can lead to fewer looks to the primed antecedent. Moreover, people do not seem to always look at the antecedent consistent with the attachment, suggesting that the link between attending to visual information and understanding spoken information may not be straightforward.

N.F. Duarte, J. Tasevski, M. Rakovic, M.I. Coco, A. Billard and J. Santos-Victor (2018) Action Reading: Anticipating the Intentions of Humans and Robots. IEEE Robotics and Automation Letters, 3(4), 2377-3766. DOI: 10.1109/LRA.2018.2861569

ABSTRACT

Humans have the fascinating capacity of processing nonverbal visual cues to understand and anticipate the actions of other humans. This “intention reading” ability is underpinned by shared motor repertoires and action models, which we use to interpret the intentions of others as if they were our own. We investigate how different cues contribute to the legibility of human actions during interpersonal interactions. Our first contribution is a publicly available dataset with recordings of human body motion and eye gaze, acquired in an experimental scenario with an actor interacting with three subjects. From these data, we conducted a human study to analyze the importance of different nonverbal cues for action perception. As our second contribution, we used motion/gaze recordings to build a computational model describing the interaction between two persons. As a third contribution, we embedded this model in the controller of an iCub humanoid robot and conducted a second human study, in the same scenario with the robot as an actor, to validate the model’s “intention reading” capability. Our results show that it is possible to model (nonverbal) signals exchanged by humans during interaction, and to incorporate such a mechanism in robotic systems with the twin goal of being able to “read” human action intentions and to act in a way that is legible by humans.

M. Garraffa, M.I. Coco and H.P. Branigan (2018) Impaired implicit learning of syntactic structure in children with developmental language disorder: Evidence from syntactic priming. Autism & Developmental Language Impairments. DOI: 10.1177/2396941518779939


M.I. Coco, R. Dale and F. Keller (2018) Performance in a Collaborative Search Task: the Role of Feedback and Cognitive Alignment. Topics in Cognitive Science, 10(1). DOI: 10.1111/tops.12300

ABSTRACT

When people communicate, they coordinate a wide range of linguistic and non-linguistic behaviors. This process of coordination is called alignment, and it is assumed to be fundamental to successful communication. In this paper, we question this assumption and investigate whether disalignment is a more successful strategy in some cases. More specifically, we hypothesize that alignment correlates with task success only when communication is interactive. We present results from a spot-the-difference task in which dyads of interlocutors have to decide whether they are viewing the same scene or not. Interactivity was manipulated in three conditions by increasing the amount of information shared between interlocutors (no exchange of feedback, minimal feedback, full dialogue). We use recurrence quantification analysis to measure the alignment between the scan-patterns of the interlocutors. We found that interlocutors who could not exchange feedback aligned their gaze more, and that increased gaze alignment correlated with decreased task success in this case. When feedback was possible, in contrast, interlocutors utilized it to better organize their joint search strategy by diversifying visual attention. This is evidenced by reduced overall alignment in the minimal feedback and full dialogue conditions. However, only the dyads engaged in a full dialogue increased their gaze alignment over time to achieve successful performance. These results suggest that alignment per se does not imply communicative success, contrary to what most models of dialogue assume. Rather, the effect of alignment depends on the type of alignment, on the goals of the task, and on the presence of feedback.

M.I. Coco, S. Araujo, and K.M. Petersson (2017) Disentangling Stimulus Plausibility and Contextual Congruency: Electro-Physiological Evidence for Differential Cognitive Dynamics. Neuropsychologia, 96, 150-163. DOI: 10.1016/j.neuropsychologia.2016.12.008

ABSTRACT

Expectancy mechanisms are routinely used by the cognitive system in stimulus processing and in anticipation of appropriate responses. Electrophysiology research has documented negative shifts of brain activity when expectancies are violated within a local stimulus context (e.g., reading an implausible word in a sentence) or more globally between consecutive stimuli (e.g., a narrative of images with an incongruent end). In this EEG study, we examine the interaction between expectancies operating at the level of stimulus plausibility and at the more global level of contextual congruency to provide evidence for, or against, a dissociation of the underlying processing mechanisms. We asked participants to verify the congruency of pairs of cross-modal stimuli (a sentence and a scene), which varied in plausibility. ANOVAs on ERP amplitudes in selected windows of interest show that congruency violation has longer-lasting (from 100 to 500 ms) and more widespread effects than plausibility violation (from 200 to 400 ms). We also observed critical interactions between these factors, whereby incongruent and implausible pairs elicited stronger negative shifts than their congruent counterparts, both early on (100–200 ms) and between 400–500 ms. Our results suggest that the integration mechanisms are sensitive to both global and local effects of expectancy in a modality-independent manner. Overall, we provide novel insights into the interdependence of expectancy during meaning integration of cross-modal stimuli in a verification task.

C. Souza, M.I. Coco, S. Pinho, C.N. Filipe and J.C. Carmo (2016) Contextual effects on visual short-term memory in High Functioning Autism Spectrum Disorder. Research in Autism Spectrum Disorders, 32, 64-69. DOI: 10.1016/j.rasd.2016.09.003

ABSTRACT

Background

According to the context blindness hypothesis (Vermeulen, 2012) individuals with autism spectrum disorders (ASD) experience difficulties in processing contextual information. This study re-evaluates this hypothesis by examining the influence exerted by contextual information on visual short-term memory.

Method

In a visual short-term memory task, we test high-functioning individuals with ASD (N = 21) and a typically developed (TD) group (N = 25) matched on age, education and IQ. In this task, participants are exposed to scenes (e.g., the photo of a restaurant), then shown a target-object that is manipulated according to its contextual Consistency with the scene (e.g., a loaf of bread versus an iron) and finally asked whether they saw the target-object or not.

Results

The response accuracy was differentially mediated by the Consistency of the target-object for both the ASD and TD groups. In particular, individuals with ASD experienced more difficulty in identifying an inconsistent target when it was present in the scene. Moreover, when a consistent object was absent from the scene, individuals with ASD were more likely to wrongly state its presence than TD individuals.

Conclusions

Our results challenge a strict interpretation of the context blindness hypothesis by demonstrating that individuals with ASD are as sensitive as TD individuals to contextual information. Individuals with ASD, however, appear to use contextual information differently than TD individuals, as they seem to rely more on consolidated contextual expectations than the TD group. These findings could drive the development of novel expectancy-based teaching strategies.

M.I. Coco, L. Badino, P. Cipresso, A. Chirico, E. Ferrari, G. Riva, A. Gaggioli, A. D’Ausilio (2016) Multiscale behavioral synchronization in a joint tower-building task. IEEE Transactions on Cognitive and Developmental Systems, 99. DOI: 10.1109/TCDS.2016.2545739

ABSTRACT

Human-to-human sensorimotor interaction can only be fully understood by modeling the patterns of bodily synchronization and reconstructing the underlying mechanisms of optimal cooperation. We designed a tower-building task to address such a goal. We recorded upper body kinematics of dyads and focused on the velocity profiles of the head and wrist. We applied recurrence quantification analysis to examine the dynamics of synchronization within, and across the experimental trials, to compare the roles of leader and follower. Our results show that the leader was more auto-recurrent than the follower to make his/her behavior more predictable. When looking at the cross-recurrence of the dyad, we find different patterns of synchronization for head and wrist motion. On the wrist, dyads synchronized at short lags, and such a pattern was weakly modulated within trials, and invariant across them. Head motion, instead, synchronized at longer lags and increased both within and between trials: a phenomenon mostly driven by the leader. Our findings point at a multilevel nature of human-to-human sensorimotor synchronization, and may provide an experimentally solid benchmark to identify the basic primitives of motion, which maximize behavioral coupling between humans and artificial agents.

M.I. Coco and N. Duran (2016). When Expectancies Collide: Action Dynamics reveal the Interaction of Plausibility and Congruency. Psychonomic Bulletin and Review, 23(6), 1920-1931. DOI: 10.3758/s13423-016-1022-9

ABSTRACT

The cognitive architecture routinely relies on expectancy mechanisms to process the plausibility of stimuli and establish their sequential congruency. In two computer mouse-tracking experiments, we use a cross-modal verification task to uncover the interaction between plausibility and congruency by examining their temporal signatures of activation competition as expressed in a computer-mouse movement decision response. In this task, participants verified the content congruency of sentence and scene pairs that varied in plausibility. The order of presentation (sentence-scene, scene-sentence) was varied between participants to uncover any differential processing. Our results show that implausible but congruent stimuli triggered less accurate and slower responses than implausible and incongruent stimuli, and were associated with more complex angular mouse trajectories independent of the order of presentation. This study provides novel evidence of a dissociation between the temporal signatures of plausibility and congruency detection on decision responses.

M.I. Coco, F. Keller, G.L. Malcolm (2016) Anticipation in Real-world Scenes: The Role of Visual Context and Visual Memory. Cognitive Science, 40, 1995-2024. DOI: 10.1111/cogs.12313

ABSTRACT

The human sentence processor is able to make rapid predictions about upcoming linguistic input. For example, upon hearing the verb eat, anticipatory eye-movements are launched toward edible objects in a visual scene (Altmann & Kamide, 1999). However, the cognitive mechanisms that underlie anticipation remain to be elucidated in ecologically valid contexts. Previous research has, in fact, mainly used clip-art scenes and object arrays, raising the possibility that anticipatory eye-movements are limited to displays containing a small number of objects in a visually impoverished context. In Experiment 1, we confirm that anticipation effects occur in real-world scenes and investigate the mechanisms that underlie such anticipation. In particular, we demonstrate that real-world scenes provide contextual information that anticipation can draw on: When the target object is not present in the scene, participants infer and fixate regions that are contextually appropriate (e.g., a table upon hearing eat). Experiment 2 investigates whether such contextual inference requires the co-presence of the scene, or whether memory representations can be utilized instead. The same real-world scenes as in Experiment 1 are presented to participants, but the scene disappears before the sentence is heard. We find that anticipation occurs even when the screen is blank, including when contextual inference is required. We conclude that anticipatory language processing is able to draw upon global scene representations (such as scene type) to make contextual inferences. These findings are compatible with theories assuming contextual guidance, but pose a challenge for theories assuming object-based visual indices.

M.I. Coco and F. Keller (2015) Integrating Mechanisms of Visual Guidance in Naturalistic Language Production. Cognitive Processing, 16(2), 131-150. DOI: 10.1007/s10339-014-0642-0

ABSTRACT

Situated language production requires the integration of visual attention and linguistic processing. Previous work has not conclusively disentangled the role of perceptual scene information and structural sentence information in guiding visual attention. In this paper, we present an eye-tracking study that demonstrates that three types of guidance, perceptual, conceptual, and structural, interact to control visual attention. In a cued language production experiment, we manipulate perceptual (scene clutter) and conceptual guidance (cue animacy) and measure structural guidance (syntactic complexity of the utterance). Analysis of the time course of language production, before and during speech, reveals that all three forms of guidance affect the complexity of visual responses, quantified in terms of the entropy of attentional landscapes and the turbulence of scan patterns, especially during speech. We find that perceptual and conceptual guidance mediate the distribution of attention in the scene, whereas structural guidance closely relates to scan pattern complexity. Furthermore, the eye–voice span of the cued object and its perceptual competitor are similar; its latency mediated by both perceptual and structural guidance. These results rule out a strict interpretation of structural guidance as the single dominant form of visual guidance in situated language production. Rather, the phase of the task and the associated demands of cross-modal cognitive processing determine the mechanisms that guide attention.

M. Garraffa, M.I. Coco and H.P. Branigan (2015). Effects of Immediate and Cumulative Syntactic Experience in Language Impairment: Evidence from Priming of Subject Relatives in Children with SLI. Language Learning and Development, 11(1), 18-40. DOI: 10.1080/15475441.2013.876277

M.I. Coco and F. Keller (2015). The Interaction of Visual and Linguistic Saliency during Syntactic Ambiguity Resolution. Quarterly Journal of Experimental Psychology, 68(1), 46-74. DOI: 10.1080/17470218.2014.936475

ABSTRACT

Psycholinguistic research using the visual world paradigm has shown that the processing of sentences is constrained by the visual context in which they occur. Recently, there has been growing interest in the interactions observed when both language and vision provide relevant information during sentence processing. In three visual world experiments on syntactic ambiguity resolution, we investigate how visual and linguistic information influence the interpretation of ambiguous sentences. We hypothesize that (1) visual and linguistic information both constrain which interpretation is pursued by the sentence processor, and (2) the two types of information act upon the interpretation of the sentence at different points during processing. In Experiment 1, we show that visual saliency is utilized to anticipate the upcoming arguments of a verb. In Experiment 2, we operationalize linguistic saliency using intonational breaks and demonstrate that these give prominence to linguistic referents. These results confirm prediction (1). In Experiment 3, we manipulate visual and linguistic saliency together and find that both types of information are used, but at different points in the sentence, to incrementally update its current interpretation. This finding is consistent with prediction (2). Overall, our results suggest an adaptive processing architecture in which different types of information are used when they become available, optimizing different aspects of situated language processing.

M.I. Coco and R. Dale (2014). Cross-Recurrence Quantification Analysis of Categorical and Continuous Time-Series: an R-Package. Frontiers in Psychology, 5:510. DOI: 10.3389/fpsyg.2014.00510

ABSTRACT

This paper describes the R package crqa for performing cross-recurrence quantification analysis of two time series of either a categorical or continuous nature. Streams of behavioral information, from eye movements to linguistic elements, unfold over time. When two people interact, such as in conversation, they often adapt to each other, leading these behavioral levels to exhibit recurrent states. In dialog, for example, interlocutors adapt to each other by exchanging interactive cues: smiles, nods, gestures, choice of words, and so on. To capture the dynamics of interaction closely, and to uncover the extent of coupling between two individuals, we need to quantify how much recurrence takes place at these levels. The methods available in crqa allow researchers in cognitive science to ask questions such as how recurrent two people are at a given level of analysis, what the characteristic lag is for one person to maximally match the other, and whether one person is leading the other. First, we lay the theoretical groundwork for understanding the difference between “correlation” and “co-visitation” when comparing two time series, using an aggregative or cross-recurrence approach. Then, we describe the principles of cross-recurrence more formally and show how to carry out analyses applying them with the package. We end the paper by comparing the computational efficiency and the consistency of results of the crqa R package with those of the benchmark MATLAB toolbox crptoolbox (Marwan, 2013). We show perfect comparability between the two libraries on both levels.
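The questions listed in the abstract (how recurrent two people are, and at which lag one best matches the other) can be made concrete with a minimal sketch for categorical series. This is plain Python written for illustration only; it is not the crqa package's R interface, it covers exact categorical matches only (continuous series would need a distance threshold), and all function names and the toy gaze sequences are hypothetical.

```python
def cross_recurrence_plot(a, b):
    """Binary cross-recurrence plot for two categorical series: cell (i, j)
    is 1 when a's state at time i equals b's state at time j."""
    return [[int(x == y) for y in b] for x in a]


def recurrence_rate(crp):
    """Overall proportion of recurrent cells in the plot."""
    cells = [cell for row in crp for cell in row]
    return sum(cells) / len(cells)


def diagonal_profile(crp, max_lag):
    """Recurrence rate along each diagonal j - i == lag, for lags in
    [-max_lag, max_lag]. A peak at a positive lag means b tends to revisit
    a's states `lag` steps later, i.e. a leads b."""
    profile = {}
    for lag in range(-max_lag, max_lag + 1):
        diag = [crp[i][i + lag] for i in range(len(crp))
                if 0 <= i + lag < len(crp[0])]
        profile[lag] = sum(diag) / len(diag) if diag else 0.0
    return profile


# Toy example: b copies a's gaze targets with a two-step delay ("Z" = elsewhere).
a = list("ABCDBADCCABD")
b = ["Z", "Z"] + a[:-2]
profile = diagonal_profile(cross_recurrence_plot(a, b), max_lag=3)
print(max(profile, key=profile.get))  # 2
```

Restricting the profile to a small lag window matters: at extreme lags only a handful of points overlap, so a single chance match can produce a spuriously high rate.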

M.I. Coco, G.L. Malcolm and F. Keller (2014). The Interplay of Bottom-Up and Top-Down Mechanisms in Visual Guidance during Object Naming. The Quarterly Journal of Experimental Psychology, 67(6), 1096-1120. DOI: 10.1080/17470218.2013.844843

ABSTRACT

An ongoing issue in visual cognition concerns the roles played by low- and high-level information in guiding visual attention, with current research remaining inconclusive about the interaction between the two. In this study, we bring fresh evidence to this long-standing debate by investigating visual saliency and contextual congruency during object naming (Experiment 1), a task in which visual processing interacts with language processing. We then compare the results of this experiment with data from a memorization task using the same stimuli (Experiment 2). In Experiment 1, we find that both saliency and congruency influence visual and naming responses and interact with linguistic factors. In particular, incongruent objects are fixated later and less often than congruent ones. However, saliency is a significant predictor of object naming, with salient objects being named earlier in a trial. Furthermore, the saliency and congruency of a named object interact with the lexical frequency of the associated word and mediate the time-course of fixations at naming. In Experiment 2, we find a similar overall pattern in the eye-movement responses, but only the congruency of the target is a significant predictor, with incongruent targets fixated less often than congruent targets. Crucially, this finding contrasts with claims in the literature that incongruent objects are more informative than congruent objects because they deviate from scene context, and hence need longer processing. Overall, this study suggests that different sources of information are interactively used to guide visual attention toward the targets to be named and raises new questions for existing theories of visual attention.

A.D.F. Clarke, M.I. Coco and F. Keller (2013). The Impact of Attentional, Linguistic and Visual Features during Object Naming. Frontiers in Psychology, 4:927. DOI: 10.3389/fpsyg.2013.00927

ABSTRACT

Object detection and identification are fundamental to human vision, and there is mounting evidence that objects guide the allocation of visual attention. However, the role of objects in tasks involving multiple modalities is less clear. To address this question, we investigate object naming, a task in which participants have to verbally identify objects they see in photorealistic scenes. We report an eye-tracking study that investigates which features (attentional, visual, and linguistic) influence object naming. We find that the amount of visual attention directed toward an object, its position and saliency, along with linguistic factors such as word frequency, animacy, and semantic proximity, significantly influence whether the object will be named or not. We then ask how features from different modalities are combined during naming, and find significant interactions between saliency and position, saliency and linguistic features, and attention and position. We conclude that when the cognitive system performs tasks such as object naming, it uses input from one modality to constrain or enhance the processing of other modalities, rather than processing each input modality independently.

M.I. Coco and F. Keller (2012). Scan Patterns Predict Sentence Production in the Cross-Modal Processing of Visual Scenes. Cognitive Science, 36(7), 1204-1223. DOI: 10.1111/j.1551-6709.2012.01246.x

ABSTRACT

Most everyday tasks involve multiple modalities, which raises the question of how the processing of these modalities is coordinated by the cognitive system. In this paper, we focus on the coordination of visual attention and linguistic processing during speaking. Previous research has shown that objects in a visual scene are fixated before they are mentioned, leading us to hypothesize that the scan pattern of a participant can be used to predict what he or she will say. We test this hypothesis using a data set of cued scene descriptions of photo-realistic scenes. We demonstrate that similar scan patterns are correlated with similar sentences, within and between visual scenes, and that this correlation holds for three phases of the language production process (target identification, sentence planning, and speaking). We also present a simple algorithm that uses scan patterns to accurately predict associated sentences by utilizing similarity-based retrieval.
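The similarity-based retrieval idea in the abstract above can be sketched as nearest-neighbour lookup: store (scan pattern, sentence) pairs and, for a new scan pattern, return the sentence paired with the most similar stored one. This is a minimal sketch under stated assumptions, not the paper's algorithm: scan patterns are assumed to be sequences of fixated object labels, similarity is assumed to be the longest common subsequence normalised by the longer sequence, and the function names and toy data are hypothetical.

```python
def lcs_length(s, t):
    """Length of the longest common subsequence of two sequences (dynamic programming)."""
    dp = [[0] * (len(t) + 1) for _ in range(len(s) + 1)]
    for i, x in enumerate(s, 1):
        for j, y in enumerate(t, 1):
            dp[i][j] = dp[i - 1][j - 1] + 1 if x == y else max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(s)][len(t)]


def similarity(s, t):
    """LCS length normalised by the longer sequence, in [0, 1]."""
    longest = max(len(s), len(t))
    return lcs_length(s, t) / longest if longest else 1.0


def predict_sentence(scan_pattern, memory):
    """Return the sentence paired with the most similar stored scan pattern.
    `memory` is a list of (scan_pattern, sentence) pairs."""
    _, sentence = max(memory, key=lambda pair: similarity(scan_pattern, pair[0]))
    return sentence


# Toy memory of (scan pattern, sentence) pairs; illustrative, not study data.
memory = [
    (["man", "table", "cup"], "The man is drinking from a cup."),
    (["woman", "bike", "road"], "The woman is riding a bike."),
]
print(predict_sentence(["man", "cup", "table", "man"], memory))
# The man is drinking from a cup.
```

An LCS-based measure rewards shared fixation order without requiring identical sequence lengths, which suits scan patterns that differ in how many fixations they contain.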

Conference Proceedings

- M.I. Coco, T. Brady, G. Merendino, G. Zappalà, A. Baddeley and S. Della Sala (2018). Forgetting in normal and pathological ageing as a function of semantic interference. Alzheimer’s & Dementia, 14(7), 915-916. DOI: 10.1016/j.jalz.2018.06.1179

- M. Borges and M.I. Coco (2015). Access and Use of Contextual Expectations in Visual Search during Aging. In Proceedings of the EuroAsianPacific Joint Conference in Cognitive Science (EAP CogSci 2015), Torino, Italy, [pdf]

- E.G. Fernandes, M.A. Costa and M.I. Coco (2015). Bridging Mechanisms of Reading, Viewing and Working Memory during Attachment Resolution of Ambiguous Relative Clauses. In Proceedings of the EuroAsianPacific Joint Conference in Cognitive Science (EAP CogSci 2015), Torino, Italy, [pdf]

- M.I. Coco and R. Dale (2015). Quantifying the Dynamics of Interpersonal Interaction: A Primer on Cross-Recurrence Quantification Analysis using R. In Proceedings of the 37th Annual Conference of the Cognitive Science Society (CogSci 2015), Pasadena, USA, [pdf]

- M.I. Coco and N.D. Duran (2015). Incidental Memory for Naturalistic Scenes: Exposure, Semantics, and Encoding. In Proceedings of the 37th Annual Conference of the Cognitive Science Society (CogSci 2015), Pasadena, USA, [pdf]

- R. Dale, C. Yu, Y. Nagai, M.I. Coco and S. Kopp (2013). Embodied Approaches to Interpersonal Coordination: Infants, Adults, Robots, and Agents. Workshop in Proceedings of the 35th Annual Conference of the Cognitive Science Society (CogSci 2013), Berlin, Germany, 24-25, [pdf]

- M.I. Coco, M. Garraffa and H.P. Branigan (2012). Subject Relative Production in SLI Children during Syntactic Priming and Sentence Repetition. In Proceedings of the 34th Annual Conference of the Cognitive Science Society (CogSci 2012), 228-233, Sapporo, Japan, [pdf]

- B. Allison, F. Keller and M.I. Coco (2012). A Bayesian Model of the Effect of Object Context on Visual Attention. In Proceedings of the 34th Annual Conference of the Cognitive Science Society (CogSci 2012), 1278-1283, Sapporo, Japan, [pdf]

- M.I. Coco and F. Keller (2011). Temporal Dynamics of Scan Patterns in Comprehension and Production. In Proceedings of the 33rd Annual Conference of the Cognitive Science Society (CogSci 2011), 2302-2307, Boston, USA, [pdf]

- M. Dziemianko, F. Keller and M.I. Coco (2011). Incremental Learning of Target Locations in Visual Search. In Proceedings of the 33rd Annual Conference of the Cognitive Science Society (CogSci 2011), 1729-1734, Boston, USA, [pdf]

- M.I. Coco and F. Keller (2010). Scan Patterns in Visual Scenes Predict Sentence Production. In Proceedings of the 32nd Annual Conference of the Cognitive Science Society (CogSci 2010), 1934-1939, Oregon, USA, [pdf]

- M.I. Coco and F. Keller (2010). Sentence Production in Naturalistic Scenes with Referential Ambiguity. In Proceedings of the 32nd Annual Conference of the Cognitive Science Society (CogSci 2010), 1070-1075, Oregon, USA, [pdf]

- M.I. Coco and F. Keller (2009). The Impact of Visual Information on Reference Assignment in Sentence Production. In Proceedings of the 31st Annual Conference of the Cognitive Science Society (CogSci 2009), 274-279, Amsterdam, The Netherlands, [pdf]

- M.I. Coco (2009). The Statistical Challenge of Scan-path Analysis. In 2nd IEEE Conference on Human System Interactions (HSI 2009), Catania, Italy, May 2009, [pdf]

- ————————————————–

- M.I. Coco, S. Araujo and K.M. Petersson (2017). Disentangling Stimulus Plausibility and Contextual Congruency: Electro-Physiological Evidence for Differential Cognitive Dynamics. Neuropsychologia, 96(2), 150–163. DOI: 10.1016/j.neuropsychologia.2016.12.008 [pdf]

- C. Souza, M.I. Coco, S. Pinho, C.N. Filipe and J.C. Carmo (2016). Contextual effects on visual short-term memory in high-functioning autism spectrum disorders. Research in Autism Spectrum Disorders, 32, 64-69. DOI: 10.1016/j.rasd.2016.09.003 [pdf]

- M.I. Coco and N. Duran (in press). When Expectancies Collide: Action Dynamics Reveal the Interaction Between Stimulus Plausibility and Congruency. Psychonomic Bulletin & Review. DOI: 10.3758/s13423-016-1022-9 [pdf]

- M.I. Coco, L. Badino, P. Cipresso, A. Chirico, E. Ferrari, G. Riva, A. Gaggioli and A. D’Ausilio (in press). Multiscale behavioral synchronization in a joint tower-building task. IEEE: TAMD. DOI: 10.1109/TCDS.2016.2545739 [doc]

- M.I. Coco, F. Keller and G.L. Malcolm (2016). Anticipation in Real-world Scenes: The Role of Visual Context and Visual Memory. Cognitive Science, 40(8), 1995–2024. DOI: 10.1111/cogs.12313 [pdf]

- M.I. Coco and F. Keller (2015). Integrating Mechanisms of Visual Guidance in Naturalistic Language Production, Cognitive Processing, 16(2), 131-150, [pdf]

- M. Garraffa, M.I. Coco and H.P. Branigan (2015). Effects of Immediate and Cumulative Syntactic Experience in Language Impairment: Evidence from Priming of Subject Relatives in Children with SLI, Language Learning and Development, 11(1), 18-40, [pdf]

- M.I. Coco and F. Keller (2015). The Interaction of Visual and Linguistic Saliency during Syntactic Ambiguity Resolution, Quarterly Journal of Experimental Psychology, 68(1), 46-74, [pdf]

- M.I. Coco and R. Dale (2014). Cross-Recurrence Quantification Analysis of Categorical and Continuous Time-Series: an R-Package. Frontiers in Psychology, Quantitative Psychology and Measurement, 5:510, [pdf]

- M.I. Coco and F. Keller (2014). Classification of Visual and Linguistic Tasks using Eye-Movement Features. Journal of Vision, 14(3):11, [pdf][tutorial][data]

- M.I. Coco, G.L. Malcolm and F. Keller (2014). The Interplay of Bottom-Up and Top-Down Mechanisms in Visual Guidance during Object Naming, The Quarterly Journal of Experimental Psychology, 67(6):1096-1120, [pdf]

- A.D.F. Clarke, M.I. Coco and F. Keller (2013). The Impact of Attentional, Linguistic and Visual Features during Object Naming, Frontiers in Psychology, 4:927, [pdf]

- M.I. Coco and F. Keller (2012). Scan Patterns Predict Sentence Production in the Cross-Modal Processing of Visual Scenes. Cognitive Science, 36(7), 1204-1223.

- M.I. Coco, A. Nuthmann and O. Dimigen (in press). Fixation-related brain potentials during semantic integration of object-scene information. Journal of Cognitive Neuroscience. [link]

PhD Thesis

M.I. Coco. Coordination of Vision and Language in Cross-Modal Referential Processing. School of Informatics, University of Edinburgh, 2011, [pdf]